Ajax Caching: Two Important Facts

August 7, 2009 in Caching, Firefox, HTTP, HttpWatch, Internet Explorer


Ajax calls are just like any other HTTP request that might be used to build a web page. However, because of their dynamic nature, people often overlook the benefits of caching them.

Rule 14 of High Performance Web Sites states:

Make Ajax Cacheable

Make sure your Ajax requests follow the performance guidelines, especially having a far future Expires header.

The rest of this blog post covers two important facts that will help you understand and effectively apply caching to Ajax requests.

Fact #1: Ajax Caching Is The Same As HTTP Caching

The HTTP and Cache sub-systems of modern browsers are at a much lower level than Ajax’s XMLHttpRequest object. At this level, the browser doesn’t know or care about Ajax requests. It simply obeys the normal HTTP caching rules based on the response headers returned from the server.

If you know about HTTP caching already, you can apply that knowledge to Ajax caching. The only real difference is that you may need to set up response headers differently from the way you would for static files.

The following response headers are used to make your Ajax cacheable:

- Expires: This should be set to an appropriate time in the future depending on how often the content changes. For example, if it is a stock price you might set an Expires value 10 seconds in the future. For a photograph, you might set a far future Expires header because you don’t ever expect it to change. The Expires header allows the browser to reuse the cached content for a period of time and avoid any unnecessary round-trips to the server.

- Last-Modified: It’s a good idea to set this so that the browser can use an If-Modified-Since header in a conditional GET request to check its locally cached content. The server would respond with a 304 status code if the data doesn’t require an update.

- Cache-Control: If appropriate, this should be set to ‘public’ so that intermediate proxies and caches can store and share the content with other users. It will also enable caching of HTTPS requests on Firefox.

Of course, this doesn’t apply if you use the POST method in your Ajax requests, because browsers do not normally cache the responses to POST requests. You should always use the POST method if your Ajax request has side effects, e.g. moving money between bank accounts.
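As a rough illustration of the three response headers listed above, the sketch below builds them for a dynamic response to a GET request. The helper name `buildCacheHeaders` and its parameters are hypothetical and are not taken from our demo; the only thing it relies on is `Date.prototype.toUTCString()`, which produces the date format HTTP expects.

```javascript
// Hypothetical helper: builds the caching headers discussed above for a
// dynamic (Ajax) response. Names and parameters are illustrative assumptions.
function buildCacheHeaders(now, lastModified, lifetimeSeconds) {
  const expires = new Date(now.getTime() + lifetimeSeconds * 1000);
  return {
    // The browser may reuse its cached copy until this time.
    "Expires": expires.toUTCString(),
    // Lets the browser send If-Modified-Since in a conditional GET.
    "Last-Modified": lastModified.toUTCString(),
    // Allows intermediate proxies and caches to store the response.
    "Cache-Control": "public",
  };
}

// Example: a stock price that may be reused for 10 seconds.
const now = new Date(Date.UTC(2009, 7, 7, 12, 0, 0));
const headers = buildCacheHeaders(now, now, 10);
console.log(headers);
```

In a Node.js handler, an object like this could be passed to `response.writeHead(200, headers)`; other server platforms have their own ways of setting response headers.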

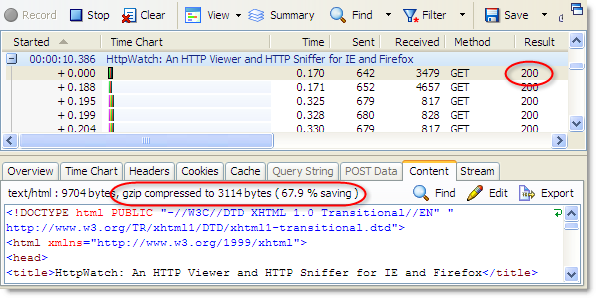

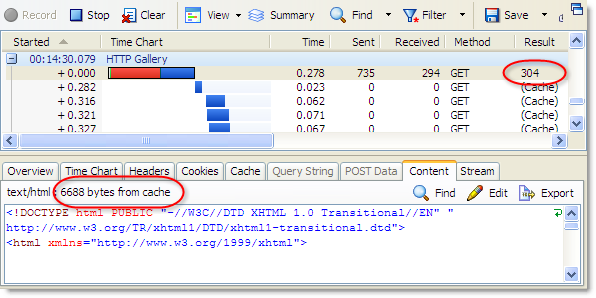

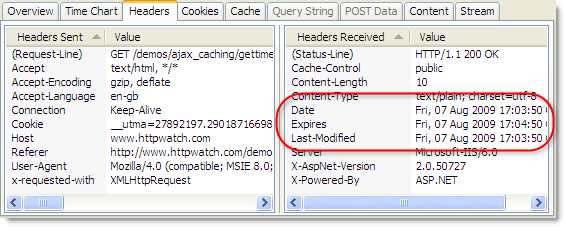

We’ve set up an Ajax caching demo that shows these headers in action. In HttpWatch, you can see that we’ve set all three of these headers in the Ajax response:

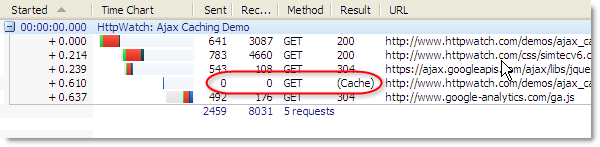

If you click on the ‘Ajax Update’ button at regular intervals, the time only changes approximately once a minute because the Expires header is set to one minute in the future. In this HttpWatch screenshot you can see that repeated clicks of the update button cause Ajax requests that are read directly from the browser cache and result in no network activity (i.e. the value in the Sent and Received columns is zero bytes):

The final click at 1:06.531 does result in an Ajax request that requires a network round-trip, because the cached data is now more than one minute old. The 200 response from the server indicates that a fresh copy of the content was downloaded.
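The behaviour seen in the demo can be modelled with a small sketch. The function below is a simplified, hypothetical model of a freshness check that looks only at the Expires header; real browsers take other headers into account as well, so treat it as an illustration rather than a description of any browser's implementation. The timestamps are made up for the example.

```javascript
// Simplified model: is a cached response still fresh, judging by Expires alone?
function isFresh(cachedHeaders, now) {
  const expires = Date.parse(cachedHeaders["Expires"]);
  // A missing or unparseable Expires value is treated as already expired.
  if (Number.isNaN(expires)) return false;
  return now.getTime() < expires;
}

// Response cached at 12:00:00 with Expires set one minute in the future.
const cached = { "Expires": "Fri, 07 Aug 2009 12:01:00 GMT" };

// A click inside the minute can be served from the cache;
// a click after it requires a network round-trip.
const clickAt30s = new Date(Date.UTC(2009, 7, 7, 12, 0, 30));
const clickAt66s = new Date(Date.UTC(2009, 7, 7, 12, 1, 6));
console.log(isFresh(cached, clickAt30s)); // true
console.log(isFresh(cached, clickAt66s)); // false
```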

Fact #2: IE Doesn’t Refresh Ajax Based Content Before Its Expiration Date

Sometimes Ajax is used at load time to populate sections of a page (e.g. a price list). Instead of being triggered by a user event such as a button click, it is directly called from the JavaScript that runs when the page is loaded. This makes the Ajax call behave as if it were a request for an embedded resource.

As you develop a page like this, it is tempting to refresh the page in an attempt to update the embedded Ajax content. With other embedded resources such as CSS or images, the browser automatically sends the following types of requests depending on whether F5 (Refresh) or Ctrl+F5 (Forced Refresh) is used:

- F5 (Refresh) causes the browser to build a conditional update request if the content originally had a Last-Modified response header. It uses the If-Modified-Since request header so that the server can avoid unnecessary downloads where possible by returning the HTTP 304 response code.

- Ctrl+F5 (Forced Refresh) causes the browser to send an unconditional GET request with a Cache-Control request header set to ‘no-cache’. This indicates to all intermediate proxies and caches that the browser needs the latest version of the resource regardless of what has already been cached.
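The two refresh types above can be summarised as a mapping from the user's action to the request headers sent for an embedded resource. The sketch below is only a model of that description: the function name `refreshRequestHeaders` and the `"normal"` / `"forced"` labels are hypothetical, and browsers may send additional headers that are not shown here.

```javascript
// Hypothetical model of the request headers described in the two bullets above.
function refreshRequestHeaders(refreshType, cachedLastModified) {
  if (refreshType === "forced") {
    // Ctrl+F5: unconditional GET that asks caches for the latest version.
    return { "Cache-Control": "no-cache" };
  }
  if (refreshType === "normal" && cachedLastModified) {
    // F5: conditional GET, possible only if Last-Modified was returned earlier.
    return { "If-Modified-Since": cachedLastModified };
  }
  // No Last-Modified value is available, so a plain GET is sent.
  return {};
}

const lastModified = "Fri, 07 Aug 2009 12:00:00 GMT";
console.log(refreshRequestHeaders("normal", lastModified));
console.log(refreshRequestHeaders("forced", lastModified));
```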

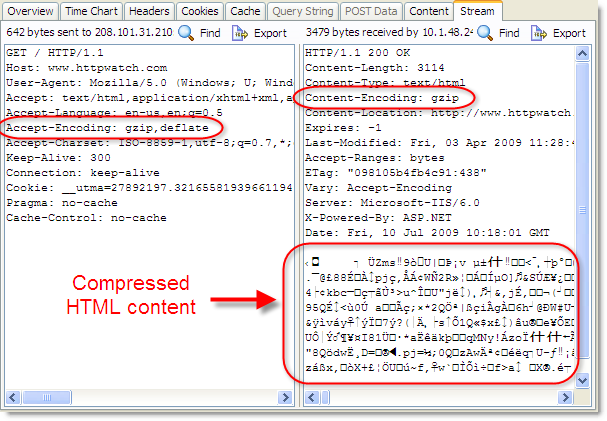

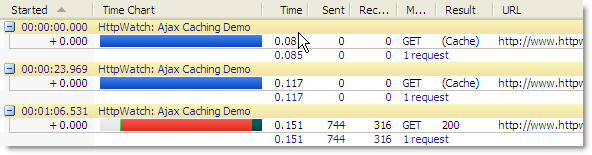

Firefox propagates the type of refresh down to any Ajax request that is made during the loading of the page and will therefore update any Ajax-derived content as if it were an embedded resource. This screenshot of the HttpWatch plug-in for Firefox shows the effect of refreshing our Ajax Caching demo page:

Firefox ensured that the Ajax request was issued as a conditional GET. The server responds with a 304 in our demo if the cached data is less than 10 seconds old or a 200 response with the updated content if it is out of date.
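The server-side decision described above might look something like the following sketch. It is not the code behind our demo: the function name `chooseStatus` is hypothetical, and it assumes that the If-Modified-Since value sent by the browser reflects the time at which the cached data was generated.

```javascript
// Hypothetical sketch of a server deciding between 304 and 200 for a
// conditional GET, using an age threshold in seconds.
function chooseStatus(ifModifiedSince, now, maxAgeSeconds) {
  const since = Date.parse(ifModifiedSince);
  // No usable If-Modified-Since header: send the full content.
  if (Number.isNaN(since)) return 200;
  const ageSeconds = (now.getTime() - since) / 1000;
  // Cached data that is young enough is confirmed with 304 Not Modified;
  // otherwise the updated content is returned with a 200.
  return ageSeconds < maxAgeSeconds ? 304 : 200;
}

const requestTime = new Date(Date.UTC(2009, 7, 7, 12, 0, 20));
console.log(chooseStatus("Fri, 07 Aug 2009 12:00:15 GMT", requestTime, 10)); // 304
console.log(chooseStatus("Fri, 07 Aug 2009 12:00:00 GMT", requestTime, 10)); // 200
```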

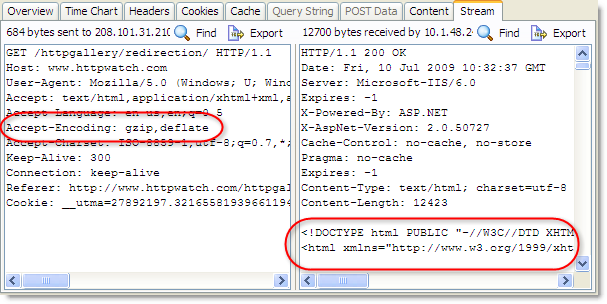

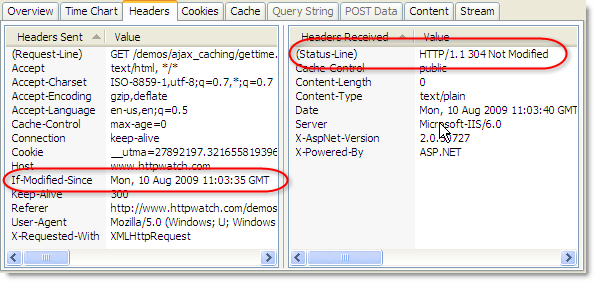

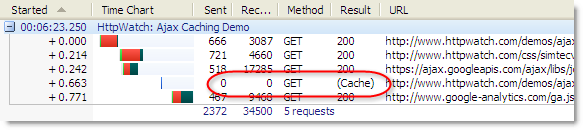

In Internet Explorer, the load-time Ajax request is treated as though it is unrelated to the rest of the page refresh and there is no propagation of the user’s Refresh action. No GET request is sent to the server if the cached Ajax content has not yet expired. It simply reads the content directly from the cache, resulting in the (Cache) result value in HttpWatch. Here’s the effect of F5 in IE before the content has expired:

Even with Ctrl+F5, the Ajax-derived content is still read from the cache:

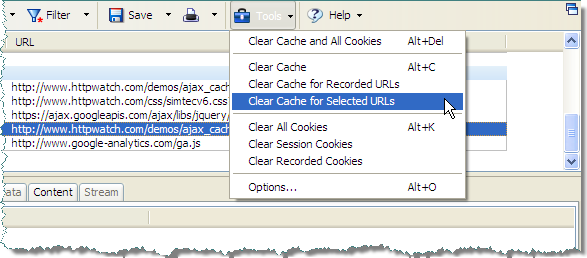

This means that any Ajax-derived content in IE is never updated before its expiration date, even if you use a forced refresh (Ctrl+F5). The only way to ensure you get an update is to manually remove the content from the cache. In HttpWatch, you can do this using the Tools menu: