How Secure Are Query Strings Over HTTPS?

A common question we hear is “Can parameters be safely passed in URLs to secure web sites?” The question often arises after a customer has looked at an HTTPS request in HttpWatch and wondered who else can see this data.

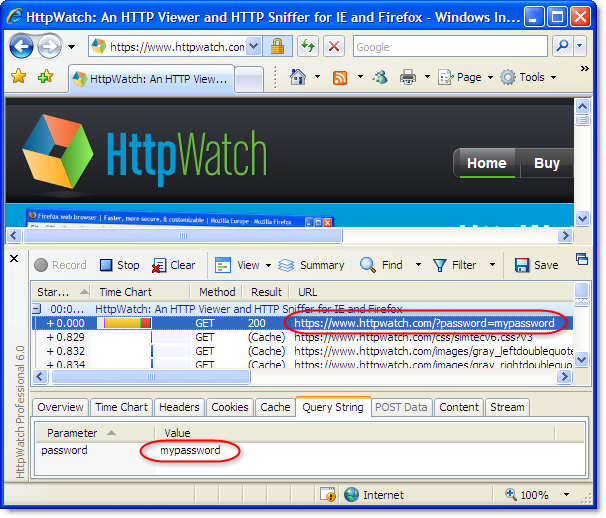

For example, let’s pretend to pass a password in a query string parameter using the following secure URL:

https://www.httpwatch.com/?password=mypassword

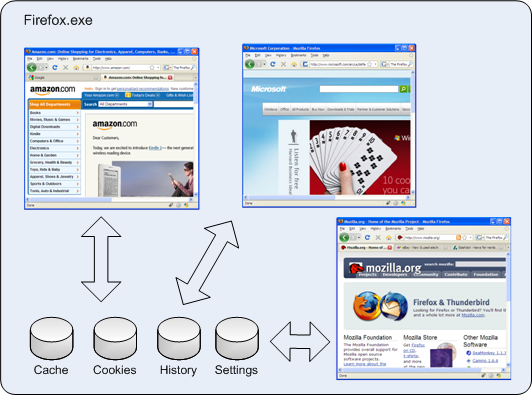

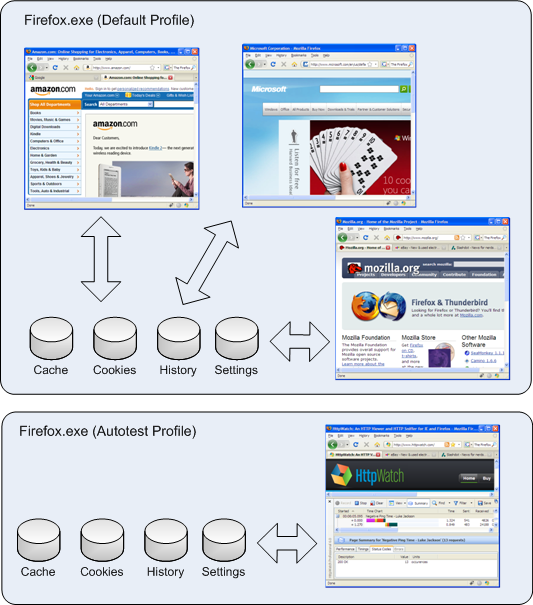

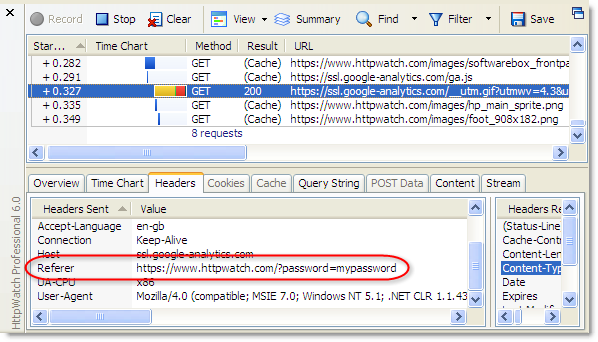

HttpWatch is able to show the contents of a secure request because it is integrated with the browser and can view the data before it is encrypted by the SSL connection used for HTTPS requests:

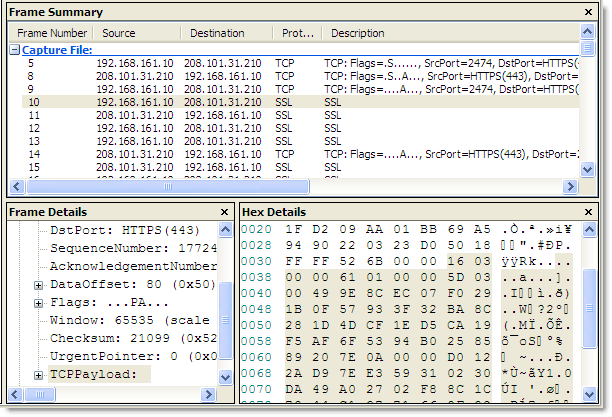

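If you look at the same request in a network sniffer, such as Network Monitor, you just see encrypted data going backwards and forwards. No URL paths, query strings, headers or content are visible in the packet trace. The server's IP address can be seen, and usually its host name as well (from the DNS lookup and the TLS Server Name Indication field).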
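To make the split concrete, here is a minimal Python sketch (standard library only) of which parts of the example URL end up where on the wire. It assumes HTTP/1.1 and a client that sends the host name in SNI. The helper `split_https_url` is hypothetical and exists only for illustration. The path and query string appear only in the request line, which is written inside the encrypted TLS stream. The host name is still exposed outside it.

```python
from urllib.parse import urlsplit


def split_https_url(url: str) -> tuple[str, str]:
    """Return (host name visible on the wire, request line sent inside TLS).

    Hypothetical helper: assumes HTTP/1.1 over TLS with SNI.
    """
    parts = urlsplit(url)
    if parts.scheme != "https":
        raise ValueError("expected an https:// URL")
    # The request target is the path plus the query string; an empty path is "/".
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    # The host name leaks via DNS and SNI; the request line is encrypted by TLS.
    return parts.hostname, f"GET {target} HTTP/1.1"


visible, encrypted = split_https_url("https://www.httpwatch.com/?password=mypassword")
print("visible to a sniffer:", visible)   # visible to a sniffer: www.httpwatch.com
print("inside TLS:", encrypted)           # inside TLS: GET /?password=mypassword HTTP/1.1
```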

You can rely on an HTTPS request being secure so long as:

- No SSL certificate warnings were ignored

- The private key used by the web server to establish the SSL connection is not available outside of the web server itself.
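Programs that call HTTPS URLs have the same obligation as a browser user. In code, the equivalent of never ignoring a certificate warning is leaving certificate and host name verification switched on. Python's standard `ssl.create_default_context()` enables both by default. The sketch below just confirms those settings and, in comments only, shows the kind of override to avoid.

```python
import ssl

# create_default_context() loads the system's trusted CA certificates and
# turns on both certificate verification and host name checking.
ctx = ssl.create_default_context()

print(ctx.verify_mode == ssl.CERT_REQUIRED)  # True: the certificate chain must validate
print(ctx.check_hostname)                    # True: the certificate must match the host

# The programmatic equivalent of clicking through a certificate warning.
# Shown only as an anti-pattern; do not do this in real code:
#   ctx.check_hostname = False
#   ctx.verify_mode = ssl.CERT_NONE
```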

So at the network level, URL parameters are secure, but there are some other ways in which URL-based data can leak:

- URLs are stored in web server logs – typically the whole URL of each request is stored in a server log. This means that any sensitive data in the URL (e.g. a password) is being saved in clear text on the server. Here’s the entry that was stored in the httpwatch.com server log when a query string was used to send a password over HTTPS:

2009-02-20 10:18:27 W3SVC4326 WWW 208.101.31.210 GET /Default.htm password=mypassword 443 ...

It’s generally agreed that storing passwords in clear text is never a good idea, even on the server.

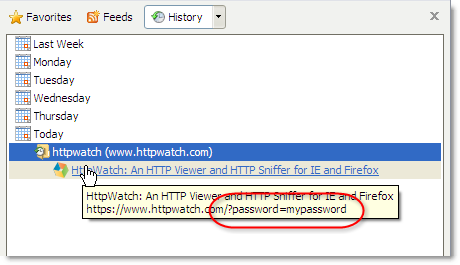

- URLs are stored in the browser history – browsers save URL parameters in their history even if the secure pages themselves are not cached. Here’s the IE history displaying the URL parameter:

Query string parameters will also be stored if the user creates a bookmark.

- URLs are passed in Referer headers – if a secure page uses resources such as JavaScript, images or analytics services, the page URL is passed in the Referer request header (the HTTP specification's spelling) of each embedded request. As a result, query string parameters may be delivered to, and stored by, third-party sites. In HttpWatch you can see that our password query string parameter is being sent across to Google Analytics:
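The Referer leak can be modelled in a few lines. Below is a simplified Python sketch of how a browser computes the Referer value for a request made from a secure page to another host. It covers only two policies from the W3C Referrer Policy specification. The first is `unsafe-url`, which sends the full URL and matches the behavior described above. The second is `strict-origin-when-cross-origin`, the default in current versions of Chrome and Firefox, which trims cross-origin referrers to the origin. The function name and the third-party URL are hypothetical. The sketch also ignores the other policies and edge cases such as explicit default ports.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def referer_for(page_url: str, request_url: str, policy: str) -> Optional[str]:
    """Simplified Referer value for a request made from page_url to request_url."""
    page, target = urlsplit(page_url), urlsplit(request_url)
    # A referrer never carries the fragment or any user:password credentials.
    netloc = page.hostname + (f":{page.port}" if page.port else "")
    full = urlunsplit((page.scheme, netloc, page.path or "/", page.query, ""))
    origin = f"{page.scheme}://{netloc}/"

    if policy == "unsafe-url":
        return full  # the whole URL, query string included
    if policy == "strict-origin-when-cross-origin":
        same_origin = (page.scheme, page.hostname, page.port) == (
            target.scheme, target.hostname, target.port)
        if same_origin:
            return full
        if page.scheme == "https" and target.scheme == "http":
            return None  # HTTPS -> HTTP downgrade: no Referer at all
        return origin  # cross-origin: scheme, host and port only
    raise ValueError(f"policy not covered by this sketch: {policy}")


page = "https://www.httpwatch.com/?password=mypassword"
tracker = "https://analytics.example.com/collect"  # hypothetical third-party URL
print(referer_for(page, tracker, "unsafe-url"))
# https://www.httpwatch.com/?password=mypassword
print(referer_for(page, tracker, "strict-origin-when-cross-origin"))
# https://www.httpwatch.com/
```

A site can also request a stricter policy for its own pages with the `Referrer-Policy` response header, for example `Referrer-Policy: no-referrer`.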

Conclusion

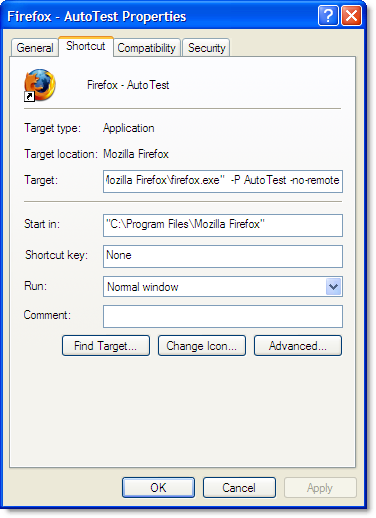

The solution to this problem requires two steps:

- Only pass around sensitive data if absolutely necessary. Once a user is authenticated, it is best to identify them with a session ID that has a limited lifetime.

- Use non-persistent, session level cookies to hold session IDs and other private data.
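The first step can be sketched as a small server-side session store. This is a minimal, in-memory Python illustration, not how ASP.NET or any particular framework implements sessions. The class name `SessionStore` and the 20-minute lifetime are assumptions. The session ID is an unguessable random token from the `secrets` module and carries no user data itself. The sensitive details stay on the server.

```python
import secrets
import time
from typing import Optional


class SessionStore:
    """Hypothetical in-memory store mapping random session IDs to users."""

    def __init__(self, lifetime_seconds: float = 20 * 60):
        self.lifetime = lifetime_seconds
        self._sessions: dict[str, tuple[str, float]] = {}

    def create(self, user_id: str) -> str:
        # 32 random bytes, URL-safe base64: unguessable and safe in a cookie.
        session_id = secrets.token_urlsafe(32)
        self._sessions[session_id] = (user_id, time.monotonic() + self.lifetime)
        return session_id

    def lookup(self, session_id: str) -> Optional[str]:
        entry = self._sessions.get(session_id)
        if entry is None:
            return None
        user_id, expires_at = entry
        if time.monotonic() >= expires_at:
            # Expired: forget the session so the ID can no longer be used.
            del self._sessions[session_id]
            return None
        return user_id


store = SessionStore()
sid = store.create("alice")
print(store.lookup(sid))              # alice
print(store.lookup("not-a-real-id"))  # None
```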

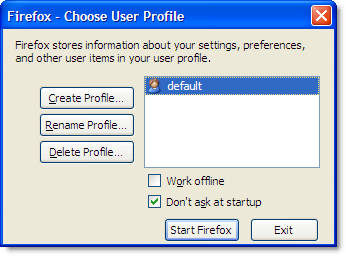

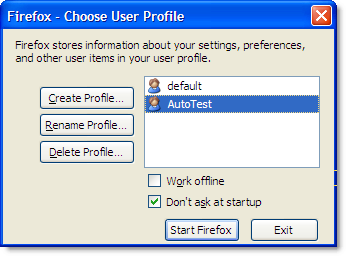

The advantages of using session level cookies to carry this information are that:

- They are not stored in the browser history or on disk

- They are usually not stored in server logs

- They are not passed to embedded resources hosted on other domains, such as third-party images or JavaScript libraries

- They only apply to the domain and path for which they were issued
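The last point follows from the cookie matching rules in RFC 6265. The Python sketch below simplifies the two checks a browser applies before attaching a cookie to a request. The function names are hypothetical. The sketch leaves out details such as the public-suffix check, IP-address hosts and the `Secure` attribute.

```python
def domain_matches(request_host: str, cookie_domain: str, host_only: bool) -> bool:
    """Simplified RFC 6265 domain-match."""
    request_host, cookie_domain = request_host.lower(), cookie_domain.lower()
    if host_only:
        # No Domain attribute was set: only the exact issuing host matches.
        return request_host == cookie_domain
    # With a Domain attribute, subdomains of the cookie domain also match.
    return request_host == cookie_domain or request_host.endswith("." + cookie_domain)


def path_matches(request_path: str, cookie_path: str) -> bool:
    """RFC 6265 path-match (section 5.1.4)."""
    if request_path == cookie_path:
        return True
    if request_path.startswith(cookie_path):
        # The cookie path must end in "/" or be followed by "/" in the request path.
        return cookie_path.endswith("/") or request_path[len(cookie_path)] == "/"
    return False


# A host-only cookie issued by store.httpwatch.com; "/cart" is a hypothetical path.
print(domain_matches("store.httpwatch.com", "store.httpwatch.com", host_only=True))  # True
print(domain_matches("www.httpwatch.com", "store.httpwatch.com", host_only=True))    # False
print(path_matches("/cart/checkout", "/cart"))  # True
print(path_matches("/cartoons", "/cart"))       # False
```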

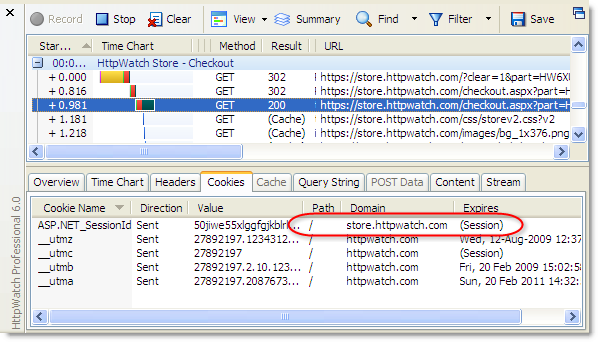

Here’s an example of the ASP.NET session cookie that is used in our online store to identify a user:

Notice that the cookie is limited to the domain store.httpwatch.com and it expires at the end of the browser session (i.e. it is not stored on disk).
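A session cookie like this comes from a `Set-Cookie` header with no `Expires` or `Max-Age` attribute, so the browser keeps it only in memory. The Python sketch below builds such a header with the standard `http.cookies` module. It is an illustration, not the exact header ASP.NET sends. Only the cookie name comes from ASP.NET, and the value is random. The `Secure` and `HttpOnly` flags are additions beyond what is described here: they keep the cookie off plain HTTP connections and out of reach of page scripts. Leaving out the `Domain` attribute makes the cookie host-only, so it is returned only to the host that set it and not to its subdomains.

```python
import secrets
from http.cookies import SimpleCookie


def session_cookie_header(name: str = "ASP.NET_SessionId") -> str:
    cookie = SimpleCookie()
    cookie[name] = secrets.token_urlsafe(18)  # random, URL-safe session ID
    morsel = cookie[name]
    morsel["path"] = "/"
    morsel["secure"] = True    # only sent over HTTPS
    morsel["httponly"] = True  # not readable from JavaScript
    # No "expires" or "max-age": a session cookie, discarded when the browser
    # session ends. No "domain": a host-only cookie.
    return cookie.output()


print(session_cookie_header())
# e.g. Set-Cookie: ASP.NET_SessionId=<random value>; HttpOnly; Path=/; Secure
```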

You can of course use query string parameters with HTTPS, but don’t use them for anything that could present a security problem. For example, you could safely use them to identify part numbers or types of display like ‘accountview’ or ‘printpage’, but don’t use them for passwords, credit card numbers or other pieces of information that should not be publicly available.
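One way to apply this rule in a code base is a small check, for example in tests or a logging hook, that flags URLs carrying parameters that look sensitive. The Python sketch below uses a hypothetical deny-list of parameter names, and the `store.example.com` URL is a made-up example. A real list would depend on the application. Name matching cannot catch every leak, so a check like this can only back up the advice above, not replace it.

```python
from urllib.parse import parse_qsl, urlsplit

# Hypothetical deny-list; adjust to the parameter names your application uses.
SENSITIVE_PARAMS = {"password", "pwd", "card", "cardnumber", "ccnum", "cvv", "ssn"}


def sensitive_params(url: str) -> list[str]:
    """Return the names of query string parameters that look sensitive."""
    query = urlsplit(url).query
    return [name for name, _ in parse_qsl(query, keep_blank_values=True)
            if name.lower() in SENSITIVE_PARAMS]


print(sensitive_params("https://www.httpwatch.com/?password=mypassword"))      # ['password']
print(sensitive_params("https://store.example.com/?view=printpage&part=1234"))  # []
```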